[Repost from blopig] Automated testing with doctest

Another blog post I wrote for OPIG a while ago, on automated testing with the doctest module in Python:

PhD in Bioinformatics, Scientist in Antibody Development


24/9/2018 (Mon)

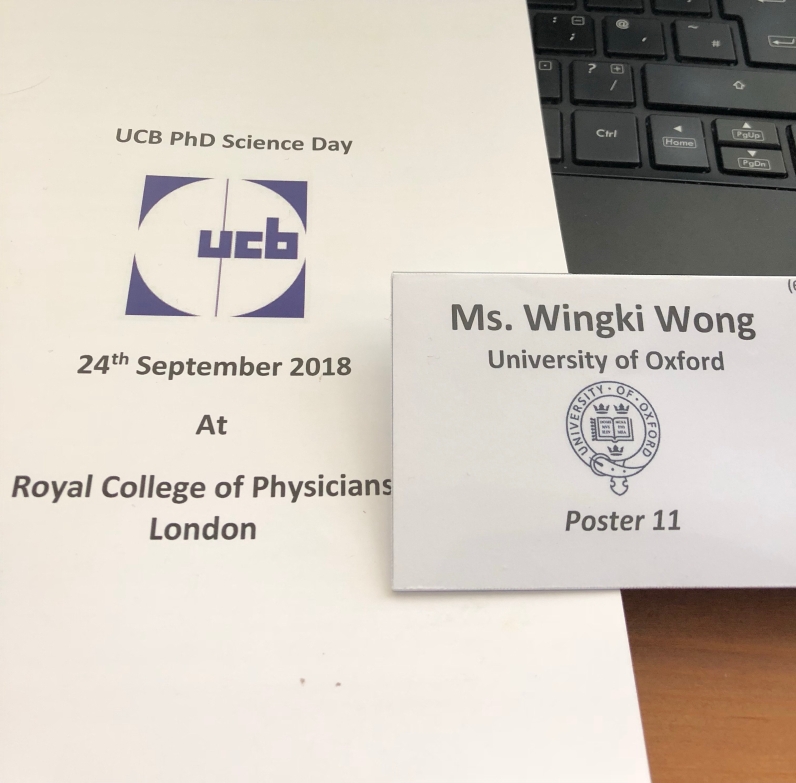

We students sponsored by UCB Pharma were summoned to London to present our research to scientists and fellow PhD students. It was a fruitful day. I talked to a number of students, from my own institution and from others, who work on similar areas but whom I would otherwise not have met because of our different approaches: experimental versus computational.

Booklet and name tag

Earlier I wrote a blog post for OPIG:

Here is the code I used:

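```cpp
#include <fstream>
#include <map>
#include <string>

#include <boost/archive/binary_oarchive.hpp>
#include <boost/iostreams/filter/zlib.hpp>
#include <boost/iostreams/filtering_streambuf.hpp>
#include <boost/serialization/map.hpp>
#include <boost/serialization/string.hpp>  // needed if the keys or values are std::string

using namespace std;

// Serialise a std::map into a zlib-compressed binary file.
// The map's key/value types were lost from this listing, so the function is generic here.
template <typename K, typename V>
void save(map<K, V> const& obj, string const& fname) {
    std::ofstream ofs(fname, std::ios::binary);
    {
        boost::iostreams::filtering_ostreambuf fos;

        // push the compressor first, then the ofstream as the final sink
        fos.push(boost::iostreams::zlib_compressor(boost::iostreams::zlib::best_compression));
        fos.push(ofs);

        // start the archive on the filtering buffer:
        boost::archive::binary_oarchive bo(fos);
        bo << obj;
    }  // bo and then fos are destroyed here, flushing the compressed stream into ofs
}
```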